When you’re processing thousands, or even millions, of customer records, speed without structure is a liability. Most financial services providers and insurers depend on data bureau batch processes to drive bulk KYC, AML, and risk checks.

This data is typically sourced from multiple systems, such as credit bureaus, KYC providers, and AML databases, but it rarely aligns neatly. Differences in format, structure, and completeness can result in mismatched records, delays, and errors that undermine the entire batch process.

That’s the core challenge of managing multi-source batch data at scale: not just collecting it, but aligning, treating, and preparing it for instant ingestion into downstream systems. If your output file needs heavy manual cleanup or repeatedly fails ingestion rules, the real issue is likely upstream.

Producing clean, treated batch data means building a pipeline that ingests inconsistent records, applies logic and rule-based validation to them, and outputs one structured file, ready for action.

Why multi-source data gets messy fast

Data quality is only as strong as its weakest source. And most businesses dealing with batch files are working with several:

- Account origination databases

- Loan origination or legacy systems with outdated records

- Third-party bureaus, often with mismatched data formats

- Internal tools with custom structures

- Local and international KYC data sources

- Complex international AML data sources

When this multi-source batch data is fed into a standard process, the results are predictably chaotic: duplicates, formatting mismatches, conflicting ID numbers, and missing fields. Suddenly, what should have been a one-click upload becomes a multi-team fire drill.

The hidden costs of poor multi-source batch data treatment

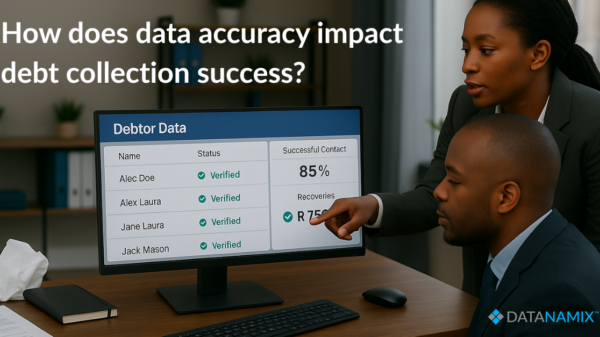

Legacy systems treat all incoming batch data the same way, failing to account for its origin or quality. That means a perfectly valid ID from Bureau A might be flagged as a mismatch simply because System B writes it differently. Multiply this across names, addresses, phone numbers, and income brackets, and the cleanup work becomes unsustainable.
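To make the Bureau A versus System B problem concrete, here is a minimal Python sketch. The function name `normalise_id` and the sample values are made up for illustration, and the only assumption is that an ID number is purely numeric, so spaces and punctuation carry no meaning.

```python
import re


def normalise_id(raw: str) -> str:
    """Keep digits only, so formatting differences do not cause mismatches."""
    return re.sub(r"\D", "", raw)


# Hypothetical values: the same ID as two different sources might write it.
bureau_a = "8001015009087"
system_b = "800101 5009 08 7"

print(bureau_a == system_b)                              # raw comparison fails
print(normalise_id(bureau_a) == normalise_id(system_b))  # normalised comparison matches
```

Comparing normalised values rather than raw strings is what stops a valid record from being rejected purely because of how a source formats it.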

That’s why batch treatment is the key differentiator. When done right, it allows you to align data from multiple sources into a single, ingestion-ready format, without manual intervention.

Use case: financial institutions running bulk KYC and AML

Picture a South African insurer needing to rerun FICA validations on 500,000 policyholders using both internal records and third-party sources. Ingesting that multi-source batch data directly would cause instant failures. Duplicate IDs, unaligned addresses, and incomplete contact details would tank ingestion and trigger non-compliance risk.

With the right system in place, each record can be:

- Cleaned and deduplicated

- Validated against formatting rules

- Reconciled using logic across sources

- Merged into one structured output file
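The four steps above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a description of any particular product: the field names, the 13-digit ID rule, and the `SOURCE_PRIORITY` ordering (internal records win over bureau records when both have a value) are all hypothetical.

```python
import csv
import io
import re
from collections import defaultdict

# Assumption: lower number wins when two sources disagree on a field.
SOURCE_PRIORITY = {"internal": 0, "bureau": 1}
OUTPUT_FIELDS = ["id_number", "name", "email"]


def clean(record):
    """Field-level cleaning: digits-only ID, collapsed whitespace, lowercase email."""
    return {
        "id_number": re.sub(r"\D", "", record.get("id_number", "")),
        "name": " ".join(record.get("name", "").split()),
        "email": record.get("email", "").strip().lower(),
        "source": record["source"],
    }


def is_valid(record):
    """Assumed formatting rule: an ID number must be exactly 13 digits."""
    return len(record["id_number"]) == 13


def reconcile(records):
    """Merge records sharing an ID, taking the first non-empty value by source priority."""
    ordered = sorted(records, key=lambda r: SOURCE_PRIORITY[r["source"]])
    return {
        field: next((r[field] for r in ordered if r[field]), "")
        for field in OUTPUT_FIELDS
    }


def treat(raw_records):
    """Clean, validate, deduplicate by ID, and reconcile. Returns (rows, rejected)."""
    groups = defaultdict(list)
    rejected = []
    for raw in raw_records:
        record = clean(raw)
        if is_valid(record):
            groups[record["id_number"]].append(record)
        else:
            rejected.append(raw)
    return [reconcile(group) for group in groups.values()], rejected


def to_csv(rows):
    """Write the treated rows as one structured CSV string."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=OUTPUT_FIELDS)
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()
```

Grouping by the cleaned ID is what makes deduplication work across sources: two records only collapse into one after their formatting differences have been removed. Rejected records are returned rather than dropped, so they can be reviewed instead of silently disappearing.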

The result: One file, ready for ingestion. No patchwork cleanup. No delays. No compliance flags.

What scalable batch treatment should include

When handling multi-source batch data at scale, your system should be able to:

- Apply cleansing rules at field level (names, IDs, emails, addresses)

- Detect and suppress duplicates across datasets

- Standardise formats for ingestion compatibility

- Flag and log inconsistencies for review

- Output a clean, unified file matched to ingestion specs
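As a rough illustration of field-level rules and an inconsistency log, the Python sketch below checks each record against a small rule table and separates clean rows from rows that need review. The patterns in `FIELD_RULES` are deliberately simplistic placeholders, not real ingestion specs, and every name here is hypothetical.

```python
import re

# Assumed, simplified format rules; real ingestion specs will be stricter.
FIELD_RULES = {
    "id_number": re.compile(r"\d{13}"),
    "email": re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+"),
    "phone": re.compile(r"\+?\d{10,15}"),
}


def find_issues(record):
    """Return a list of human-readable problems found in one record."""
    issues = []
    for field, pattern in FIELD_RULES.items():
        value = record.get(field, "")
        if not value:
            issues.append(f"{field}: missing")
        elif not pattern.fullmatch(value):
            issues.append(f"{field}: bad format")
    return issues


def split_batch(records):
    """Separate ingestion-ready records from those flagged for review."""
    ready, review_log = [], []
    for row_number, record in enumerate(records, start=1):
        issues = find_issues(record)
        if issues:
            review_log.append({"row": row_number, "issues": issues})
        else:
            ready.append(record)
    return ready, review_log
```

Keeping the rules in one table means a new field or a tighter format can be added without touching the processing logic, and the review log gives compliance teams a record of exactly which rows were held back and why.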

Anything less, and you’re not just wasting time — you’re exposing your business to risk.

What clean batch outputs unlock for your team

When the treatment happens upfront, everything downstream runs smoother:

- Ops teams no longer have to clean files manually

- Risk and compliance teams get audit-friendly reports

- IT and credit teams stop troubleshooting broken files

- Customer onboarding times shrink

It’s operational efficiency and reputational protection.

How Datanamix helps with multi-source batch data at scale

Datanamix is purpose-built to solve the exact problem of processing multi-source batch data at scale. Our platform ingests data from multiple sources — whether internal systems, bureaus, CRMs, or legacy platforms — then intelligently cleans, validates, and aligns each record using advanced rules and AI logic.

The result? One structured, ingestion-ready output file that meets your downstream system requirements. No duplicate headaches. No formatting chaos. No more manual patchwork.

We serve financial services providers, insurers, telcos, and any organisation needing to onboard or verify customers in bulk. From FICA and AML to bureau scoring and segmentation, Datanamix makes it easy to treat multi-source batch data at scale, with speed and accuracy you can trust.

Clean data in. Clean file out. Fewer risks, faster results.

Need to process batch files from multiple sources with confidence?

Let us show you how Datanamix simplifies multi-source batch data at scale, so you can focus on action, not admin.